In recent years, the proliferation of machine learning (ML) applications across industries has given rise to a new field known as ML Ops, short for Machine Learning Operations. ML Ops is a set of practices and tools aimed at streamlining the deployment, management, and scaling of machine learning models in production environments. This essay examines why ML Ops matters to modern data-driven organizations, along with its key components, challenges, and best practices.

The Significance of ML Ops

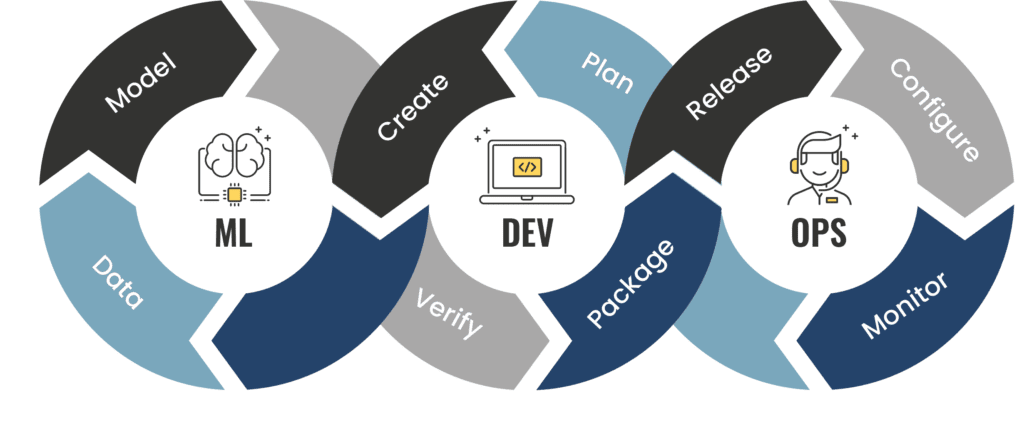

Machine learning models play a pivotal role in enabling organizations to derive actionable insights, automate processes, and enhance decision-making. However, the journey from prototype to production involves numerous challenges, including data management, model deployment, monitoring, and maintenance. ML Ops addresses these challenges by applying principles of DevOps, automation, and collaboration to streamline the end-to-end machine learning lifecycle.

ML Ops aims to ensure that machine learning models are deployed efficiently, scale with demand, and perform consistently in production. By automating repetitive tasks, enforcing best practices, and fostering collaboration between data scientists, engineers, and operations teams, ML Ops shortens the time-to-market for ML applications and increases their impact on business outcomes.

Key Components of ML Ops

ML Ops encompasses a diverse set of practices and tools designed to manage the entire machine learning lifecycle. Key components of ML Ops include:

1. Model Development: ML Ops begins with model development, where data scientists explore and preprocess data and train machine learning models using libraries like TensorFlow, PyTorch, or scikit-learn. Version control systems such as Git support collaboration and reproducibility by tracking changes to code; tools such as DVC or MLflow extend that tracking to datasets and model artifacts, which are usually too large to store in Git directly.

2. Model Deployment: Once a model is trained and validated, ML Ops focuses on deploying it into production. Containerization platforms like Docker package a model and its dependencies into a portable image, and orchestration tools such as Kubernetes run and scale those images as services. Continuous integration and continuous deployment (CI/CD) pipelines automate building, testing, and deploying models, enabling frequent and reliable releases.

3. Monitoring and Logging: Monitoring the performance of deployed models is crucial for detecting anomalies, ensuring reliability, and maintaining quality of service. ML Ops incorporates monitoring and logging solutions that track key metrics such as prediction latency, throughput, accuracy, and data drift. Prometheus and Grafana (for metrics collection and dashboards) and the ELK stack (Elasticsearch, Logstash, and Kibana, for logs) provide real-time insight into model behavior and enable proactive remediation of issues.

4. Model Governance and Compliance: ML Ops emphasizes the importance of model governance and compliance to mitigate risks associated with bias, fairness, and regulatory requirements. Model governance frameworks establish policies and processes for model evaluation, approval, and documentation. Explainable AI techniques and fairness metrics help identify and mitigate biases in models, ensuring ethical and accountable AI deployments.
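
The drift tracking described under Monitoring and Logging can be made concrete with a single statistic. The sketch below computes the Population Stability Index (PSI), one common drift measure chosen here for illustration (the essay does not prescribe a specific metric), between a feature's training-time distribution and its live distribution. The bin count, the epsilon smoothing, and the 0.2 alert threshold are assumptions; 0.2 is a widely quoted rule of thumb rather than a standard.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference sample and a live sample.

    Bin edges come from the reference (training) sample, so both
    distributions are compared on the same equal-width grid.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp live values outside the reference range into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        total = len(values)
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / total, eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb alert level


def check_drift(expected: Sequence[float], actual: Sequence[float]) -> bool:
    """Return True when the live distribution has drifted past the threshold."""
    return psi(expected, actual) > DRIFT_THRESHOLD


if __name__ == "__main__":
    train = [i / 100 for i in range(1000)]         # reference values 0.00..9.99
    shifted = [i / 100 + 5 for i in range(1000)]   # live traffic shifted upward
    print(psi(train, train), check_drift(train, train))
    print(psi(train, shifted), check_drift(train, shifted))
```

In a deployed system, a check like this would typically run on a schedule per feature, with its result exported as a metric so that dashboards and alerts can pick it up.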
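
Among the fairness metrics mentioned under Model Governance and Compliance, one of the simplest is the demographic parity difference: the gap between the highest and lowest positive-prediction rates across groups. The plain-Python sketch below computes it from binary predictions and a group label per example. The function names and the 0.1 tolerance are hypothetical policy choices, not an established standard.

```python
from collections import defaultdict
from typing import Hashable, Sequence


def selection_rates(preds: Sequence[int], groups: Sequence[Hashable]) -> dict:
    """Fraction of positive (1) predictions within each group."""
    positives: dict = defaultdict(int)
    totals: dict = defaultdict(int)
    for p, g in zip(preds, groups):
        totals[g] += 1
        positives[g] += int(p == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(preds: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(preds, groups)
    return max(rates.values()) - min(rates.values())


MAX_PARITY_GAP = 0.1  # hypothetical governance tolerance


def passes_fairness_review(preds: Sequence[int], groups: Sequence[Hashable]) -> bool:
    """Gate a model release on the parity gap staying within tolerance."""
    return demographic_parity_difference(preds, groups) <= MAX_PARITY_GAP


if __name__ == "__main__":
    preds = [1, 0, 1, 1, 0, 1, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(selection_rates(preds, groups))                # {'a': 0.75, 'b': 0.25}
    print(demographic_parity_difference(preds, groups))  # 0.5
    print(passes_fairness_review(preds, groups))         # False
```

Demographic parity is only one fairness definition. A governance framework would normally record which metric it uses and why, since different definitions can conflict with one another.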

Challenges in ML Ops

Despite its benefits, ML Ops poses several challenges that organizations must overcome to realize its full potential:

1. Complexity: Managing the end-to-end machine learning lifecycle involves dealing with diverse technologies, tools, and stakeholders. Integrating disparate systems, ensuring compatibility, and orchestrating workflows across teams require careful planning and coordination.

2. Scalability: As organizations deploy more machine learning models to production, scaling infrastructure and managing resources become harder. ML Ops platforms must support auto-scaling and resource optimization to handle fluctuating workloads efficiently.

3. Data Management: Data is the lifeblood of machine learning, and effective data management is critical for successful ML Ops. Ensuring data quality, lineage, privacy, and security throughout the data lifecycle requires robust data governance frameworks and compliance measures.

4. Talent Gap: The shortage of skilled ML engineers, data scientists, and DevOps professionals poses a significant barrier to adopting ML Ops. Organizations must invest in training, upskilling, and talent development initiatives to build a proficient ML Ops workforce.
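
The auto-scaling mentioned under Scalability often follows a proportional rule. For example, the Kubernetes Horizontal Pod Autoscaler documents its core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping the change when the ratio is within a tolerance (0.1 by default). The sketch below reproduces that rule for a model-serving deployment with min/max bounds. The parameter names, the default bounds, and the requests-per-second metric are assumptions for illustration, and the real controller adds logic such as stabilization windows that is omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
    tolerance: float = 0.1,
) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA.

    current_metric and target_metric could be, e.g., average requests per
    second per model-serving replica (an assumed metric).
    """
    ratio = current_metric / target_metric
    # Leave the replica count alone when load is close to target, to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # 4 replicas each handling 150 req/s against a 100 req/s target: scale out.
    print(desired_replicas(4, 150.0, 100.0))  # 6
    # Load within 10% of target: no change.
    print(desired_replicas(4, 105.0, 100.0))  # 4
    # Traffic drops sharply: scale in, but never below min_replicas.
    print(desired_replicas(4, 10.0, 100.0))   # 1
```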
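
The data-quality side of Data Management can start as simple batch validation that runs before training or scoring. The sketch below checks each record against a declared schema of types, numeric ranges, and nullability, and returns readable violations instead of failing on the first one. The `FieldRule` format, the `SCHEMA` columns, and the example records are hypothetical. In production, teams often rely on dedicated data-validation libraries instead.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class FieldRule:
    """Expected type, optional numeric range, and nullability for one column (hypothetical format)."""
    dtype: type
    min_value: Optional[float] = None
    max_value: Optional[float] = None
    nullable: bool = False


# Hypothetical schema for a tabular training batch.
SCHEMA = {
    "age": FieldRule(int, min_value=0, max_value=120),
    "income": FieldRule(float, min_value=0.0, nullable=True),
    "country": FieldRule(str),
}


def validate(records: list[dict[str, Any]], schema: dict[str, FieldRule]) -> list[str]:
    """Return human-readable violations; an empty list means the batch passed."""
    errors = []
    for i, row in enumerate(records):
        for name, rule in schema.items():
            value = row.get(name)
            if value is None:
                if not rule.nullable:
                    errors.append(f"row {i}: '{name}' is missing")
                continue
            if not isinstance(value, rule.dtype):
                errors.append(f"row {i}: '{name}' expected {rule.dtype.__name__}, "
                              f"got {type(value).__name__}")
                continue
            if rule.min_value is not None and value < rule.min_value:
                errors.append(f"row {i}: '{name}'={value} below {rule.min_value}")
            if rule.max_value is not None and value > rule.max_value:
                errors.append(f"row {i}: '{name}'={value} above {rule.max_value}")
    return errors


if __name__ == "__main__":
    batch = [
        {"age": 34, "income": 52000.0, "country": "DE"},
        {"age": -3, "income": None, "country": "FR"},
        {"age": 41, "income": 61000.0},
    ]
    for problem in validate(batch, SCHEMA):
        print(problem)
    # row 1: 'age'=-3 below 0
    # row 2: 'country' is missing
```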

Best Practices in ML Ops

To overcome these challenges and maximize the benefits of ML Ops, organizations should adopt the following best practices:

1. Collaboration and Communication: Foster collaboration and communication between data science, engineering, and operations teams to align objectives, share knowledge, and streamline workflows.

2. Automation: Automate repetitive tasks, such as model deployment, testing, and monitoring, to increase efficiency, reduce errors, and accelerate time-to-market.

3. Standardization: Establish standardized processes, tools, and best practices for model development, deployment, and maintenance to ensure consistency and repeatability.

4. Continuous Improvement: Embrace a culture of continuous improvement by soliciting feedback, monitoring performance metrics, and iteratively refining ML Ops workflows.
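
The automation, standardization, and continuous-improvement practices above often meet in an automated promotion gate. This is a CI/CD step that deploys a retrained model only if it passes the same checks every time. The sketch below shows one hypothetical shape for such a gate. The `ModelReport` fields, metric names, and thresholds are assumptions and are not part of any particular CI system.

```python
from dataclasses import dataclass


@dataclass
class ModelReport:
    """Offline evaluation results for one model version (hypothetical fields)."""
    version: str
    accuracy: float
    p95_latency_ms: float


def should_promote(candidate: ModelReport, production: ModelReport,
                   min_gain: float = 0.0,
                   max_latency_ms: float = 200.0) -> tuple[bool, list[str]]:
    """Decide whether a candidate may replace the production model.

    Returns the decision plus the reasons for any rejection, so the CI log
    explains why a release was blocked.
    """
    reasons = []
    required = production.accuracy + min_gain
    if candidate.accuracy < required:
        reasons.append(f"accuracy {candidate.accuracy:.3f} below required {required:.3f}")
    if candidate.p95_latency_ms > max_latency_ms:
        reasons.append(f"p95 latency {candidate.p95_latency_ms:.0f} ms "
                       f"exceeds {max_latency_ms:.0f} ms budget")
    return (not reasons, reasons)


if __name__ == "__main__":
    prod = ModelReport("v12", accuracy=0.912, p95_latency_ms=85.0)
    cand = ModelReport("v13", accuracy=0.921, p95_latency_ms=240.0)
    ok, why = should_promote(cand, prod)
    print(ok)  # False
    for reason in why:
        print(reason)  # p95 latency 240 ms exceeds 200 ms budget
```

In a pipeline, a wrapper script could exit with a non-zero status when `ok` is false, so that the deployment stage never runs. Reviewing the logged reasons over time is one way to feed the continuous-improvement loop.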


ML Ops plays a pivotal role in bridging the gap between machine learning development and operations, enabling organizations to deploy, manage, and scale machine learning models effectively in production. By combining DevOps principles with automation and cross-team collaboration, ML Ops streamlines the end-to-end machine learning lifecycle and maximizes the impact of ML applications on business outcomes. While ML Ops presents challenges, organizations can overcome them by adopting best practices, fostering collaboration, and investing in talent development. As demand for AI and machine learning continues to grow, ML Ops is likely to become increasingly indispensable for organizations seeking to harness data-driven insights and innovation.